Hadoop Series (Part 1)

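- I. Environment Installation and Configuration

- (1) Firewall, hostname, and hosts file

- (2) Passwordless SSH login

- (3) Install the JDK

- (4) Install Hadoop

- 1. Download the Hadoop package

- 2. Extract it to the target directory

- 3. Configure environment variables

- 4. Edit the configuration files

- 5. Format the NameNode

- 6. Start the cluster

- 7. Check the running status

- 8. View in a browser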

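I. Environment Installation and Configuration

(1) Firewall, hostname, and hosts file

On every machine, stop the firewall, set the hostname, and add the cluster nodes to /etc/hosts.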

    [root@master ~]# service iptables stop
    iptables: Setting chains to policy ACCEPT: filter [  OK  ]
    iptables: Flushing firewall rules: [  OK  ]
    iptables: Unloading modules: [  OK  ]
    [root@master ~]# service iptables status
    iptables: Firewall is not running.
    [root@master ~]# hostname master
    [root@master ~]# vi /etc/sysconfig/network
    NETWORKING=yes
    HOSTNAME=master
    [root@master autossh]# vi /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.211.132 master
    192.168.211.133 slave1
    192.168.211.134 slave2
    192.168.211.135 slave3
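The four cluster entries in /etc/hosts have to be identical on every machine, so it can help to script them instead of editing each file by hand. The following is a minimal sketch, not part of the original setup: the `add_host_entry` helper and the `HOSTS_FILE` variable are hypothetical names, and the demo writes to a temporary file rather than the real /etc/hosts.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not mapped yet.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -w matches the hostname as a whole word, so "slave1" does not match "slave10".
    if ! grep -qw -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demo against a temporary file; point HOSTS_FILE at /etc/hosts on a real node.
HOSTS_FILE="$(mktemp)"
add_host_entry "$HOSTS_FILE" 192.168.211.132 master
add_host_entry "$HOSTS_FILE" 192.168.211.133 slave1
add_host_entry "$HOSTS_FILE" 192.168.211.134 slave2
add_host_entry "$HOSTS_FILE" 192.168.211.135 slave3
add_host_entry "$HOSTS_FILE" 192.168.211.132 master   # repeated call adds nothing
cat "$HOSTS_FILE"
```

Because the helper skips names that are already present, the script can be run again on a node without duplicating lines.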

(2) Passwordless SSH login

initSshFile.sh

    #!/bin/bash
    ssh-keygen -q -t rsa -N "" -f /root/.ssh/id_rsa
    cat /root/.ssh/id_rsa.pub > /root/.ssh/authorized_keys
    chmod go-rwx /root/.ssh/authorized_keys

nologinssh.sh

    #!/bin/bash
    /boom/docs/autossh/autossh.sh root 123456 master
    /boom/docs/autossh/autossh.sh root 123456 slave1
    /boom/docs/autossh/autossh.sh root 123456 slave2
    /boom/docs/autossh/autossh.sh root 123456 slave3

autossh.sh

    #!/usr/bin/expect
    set timeout 10
    set username [lindex $argv 0]
    set password [lindex $argv 1]
    set hostname [lindex $argv 2]
    spawn ssh-copy-id -i /root/.ssh/id_rsa.pub $username@$hostname
    expect {
        "Are you sure you want to continue connecting (yes/no)?" {
            # First connection: the host key is not yet in ~/.ssh/known_hosts.
            send "yes\r"
            expect "password:"
            send "$password\r"
        }
        "password:" {
            # The host key is already in ~/.ssh/known_hosts.
            send "$password\r"
        }
        "Now try logging into the machine" {
            # The key is already authorized; nothing to do.
        }
    }
    expect eof

1. Run initSshFile.sh on every machine first

    [root@master autossh]# ./initSshFile.sh
    -bash: ./initSshFile.sh: Permission denied
    [root@master autossh]# chmod 777 ./*
    [root@master autossh]# ./initSshFile.sh
    [root@master autossh]# ll
    total 12
    -rwxrwxrwx. 1 root root 709 Jun 13 2016 autossh.sh
    -rwxrwxrwx. 1 root root 222 Apr 28 13:17 initSshFile.sh
    -rwxrwxrwx. 1 root root 209 Apr 28 21:39 nologinssh.sh

2. Edit nologinssh.sh on the master machine, then copy it to each slave machine

    [root@master autossh]# vi nologinssh.sh
    #!/bin/bash
    /boom/docs/autossh/autossh.sh root 123456 master
    /boom/docs/autossh/autossh.sh root 123456 slave1
    /boom/docs/autossh/autossh.sh root 123456 slave2
    /boom/docs/autossh/autossh.sh root 123456 slave3

3. Run /usr/program/shell/nologinssh.sh on every machine

    [root@master autossh]# ./nologinssh.sh
    ./nologinssh.sh: /boom/docs/autossh/autossh.sh: /usr/bin/expect: bad interpreter: No such file or directory
    ./nologinssh.sh: /boom/docs/autossh/autossh.sh: /usr/bin/expect: bad interpreter: No such file or directory
    ./nologinssh.sh: /boom/docs/autossh/autossh.sh: /usr/bin/expect: bad interpreter: No such file or directory
    ./nologinssh.sh: /boom/docs/autossh/autossh.sh: /usr/bin/expect: bad interpreter: No such file or directory
    [root@master autossh]# yum install expect
    Loaded plugins: fastestmirror, refresh-packagekit, security
    Loading mirror speeds from cached hostfile
    [root@master autossh]# ./nologinssh.sh
    spawn ssh-copy-id -i /root/.ssh/id_rsa.pub root@master
    The authenticity of host 'master (192.168.211.132)' can't be established.
    RSA key fingerprint is 2a:30:e3:2b:c9:f6:61:30:f9:eb:ae:31:40:65:b4:cf.
    Are you sure you want to continue connecting (yes/no)? yes

4. Test passwordless login between all machines

    [root@master autossh]# ssh slave1
    Last login: Fri Apr 28 21:06:07 2017 from 192.168.211.1
    [root@slave1 ~]# exit
    logout
    Connection to slave1 closed.
    [root@master autossh]# ssh slave2
    Last login: Fri Apr 28 21:06:27 2017 from 192.168.211.1
    [root@slave2 ~]# exit
    logout
    Connection to slave2 closed.
    [root@master autossh]# ssh slave3
    Last login: Fri Apr 28 21:06:44 2017 from 192.168.211.1
    [root@slave3 ~]# exit
    logout
    Connection to slave3 closed.
    [root@master autossh]#
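Logging in to each host by hand works for four nodes, but the same check can be scripted. The sketch below is an illustration only: `check_passwordless` is a hypothetical helper name, and it relies on the standard OpenSSH options `BatchMode` and `ConnectTimeout` so that a host still requiring a password fails instead of prompting.

```shell
#!/bin/bash
# Hypothetical helper: report whether each host accepts key-based login.
check_passwordless() {
    local failed=0 host
    for host in "$@"; do
        # BatchMode=yes makes ssh fail rather than ask for a password.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    # Non-zero when at least one host could not be reached without a password.
    return "$failed"
}
```

Running `check_passwordless master slave1 slave2 slave3` on each machine should print one line per host and return non-zero if any of them still asks for a password.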

(3) Install the JDK

1. Download the JDK package

2. Extract it to the target directory

    [root@master softs]# ll
    total 181192
    -rw-r--r--. 1 root root 185540433 Apr 28 14:08 jdk-8u131-linux-x64.tar.gz
    [root@master softs]# tar -zxf jdk-8u131-linux-x64.tar.gz -C /usr/program/jdk
    [root@master softs]# cd /usr/program/jdk
    [root@master jdk]# ll

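3. Configure environment variables

Add the following lines to /etc/profile, then reload it and confirm that java is on the PATH:

    export JAVA_HOME=/usr/program/jdk
    export HADOOP_HOME=/usr/program/hadoop
    export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$PATH
    [root@master boom]# source /etc/profile
    [root@master boom]# java
    Usage: java [-options] class [args...]
               (to execute a class)
       or  java [-options] -jar jarfile [args...]
               (to execute a jar file)
    where options include: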
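A quick way to confirm that JAVA_HOME and HADOOP_HOME point at the right directories is to check that each one actually contains its launcher under bin/. This is a small sketch, assuming nothing beyond the paths used above; `check_home` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: confirm that a *_HOME directory contains the expected executable.
check_home() {
    local home="$1" binary="$2"
    if [ -x "$home/bin/$binary" ]; then
        echo "ok: $home/bin/$binary"
    else
        echo "missing: $home/bin/$binary" >&2
        return 1
    fi
}
```

After `source /etc/profile`, calling `check_home "$JAVA_HOME" java && check_home "$HADOOP_HOME" hadoop` should print two "ok" lines. A "missing" line usually means the archive was unpacked one directory level deeper than the variable says.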

(4) Install Hadoop

1. Download the Hadoop package

http://apache.fayea.com/hadoop/common/hadoop-2.7.3/hadoop-2.7.3.tar.gz

2. Extract it to the target directory

    [root@master softs]# cd /boom/softs
    [root@master softs]# mkdir /usr/program/hadoop
    [root@master softs]# tar -zxf hadoop-2.7.3.tar.gz -C /usr/program/hadoop
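The Hadoop archive unpacks into a top-level hadoop-2.7.3/ directory, while the later steps use paths directly under /usr/program/hadoop (for example /usr/program/hadoop/logs). The original does not show how that directory level was removed. One possible approach, sketched here as an assumption rather than the author's method, is tar's `--strip-components` option; `extract_flat` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: unpack an archive into DEST without its single top-level directory.
extract_flat() {
    local archive="$1" dest="$2"
    mkdir -p "$dest"
    # --strip-components=1 drops the leading path element (e.g. "hadoop-2.7.3/"),
    # so bin/, etc/ and sbin/ end up directly under DEST.
    tar -zxf "$archive" -C "$dest" --strip-components=1
}
```

With this helper, `extract_flat hadoop-2.7.3.tar.gz /usr/program/hadoop` would leave bin/hadoop at /usr/program/hadoop/bin/hadoop. Moving the contents of the extracted directory up one level by hand gives the same result.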

3. Configure environment variables

    export JAVA_HOME=/usr/program/jdk
    export HADOOP_HOME=/usr/program/hadoop
    export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$PATH

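4. Edit the configuration files

Edit each configuration file on the master node as shown below, then copy the files to every slave node with scp.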

1) hadoop-env.sh

    #export JAVA_HOME=${JAVA_HOME}
    export JAVA_HOME=/usr/program/jdk

2) yarn-env.sh

    # export JAVA_HOME=/home/y/libexec/jdk1.6.0/
    export JAVA_HOME=/usr/program/jdk

3) hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <property>
        <name>dfs.permissions</name>
        <value>false</value>
      </property>
      <property>
        <name>dfs.client.block.write.replace-datanode-on-failure.enable</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.client.block.write.replace-datanode-on-failure.policy</name>
        <value>NEVER</value>
      </property>
    </configuration>

4) core-site.xml

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/hdfs/fs1</value>
      </property>
      <property>
        <name>fs.trash.interval</name>
        <value>1440</value>
      </property>
    </configuration>

hadoop.tmp.dir: by default, both the NameNode metadata and the DataNode block data are stored under this path.
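To make the note about hadoop.tmp.dir concrete: when dfs.namenode.name.dir and dfs.datanode.data.dir are left unset, as they are in this setup, their defaults are derived from hadoop.tmp.dir. The sketch below only prints those derived locations for a given value; `default_dirs` is a hypothetical helper name, not a Hadoop command.

```shell
#!/bin/bash
# Hypothetical helper: print the HDFS default storage locations derived from hadoop.tmp.dir.
default_dirs() {
    local tmp_dir="$1"
    # hdfs-default.xml: dfs.namenode.name.dir = file://${hadoop.tmp.dir}/dfs/name
    echo "dfs.namenode.name.dir=file://$tmp_dir/dfs/name"
    # hdfs-default.xml: dfs.datanode.data.dir = file://${hadoop.tmp.dir}/dfs/data
    echo "dfs.datanode.data.dir=file://$tmp_dir/dfs/data"
}

default_dirs /hdfs/fs1
```

On a configured node, `hdfs getconf -confKey dfs.namenode.name.dir` should report the resolved value that Hadoop actually uses.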

5) yarn-site.xml

    <configuration>
      <property>
        <description>The hostname of the RM.</description>
        <name>yarn.resourcemanager.hostname</name>
        <value>master</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
      </property>
    </configuration>

6) slaves

List the slave nodes, one per line:

    slave1
    slave2
    slave3
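Once the six files are edited on the master, they have to reach every slave. A minimal sketch of that copy step follows. It assumes the configuration directory is etc/hadoop under the Hadoop install path (the Hadoop 2.x layout) and that the slaves use the same path. `push_config` and the `RUN` switch are hypothetical names; by default the function only prints the commands it would run.

```shell
#!/bin/bash
# Hypothetical helper: print (or, with RUN=1, execute) the scp command for each slave.
push_config() {
    local conf_dir="$1" host
    shift
    for host in "$@"; do
        if [ "${RUN:-0}" = "1" ]; then
            # Copy every file in the config directory to the same path on the slave.
            scp "$conf_dir"/* "root@$host:$conf_dir/"
        else
            echo "scp $conf_dir/* root@$host:$conf_dir/"
        fi
    done
}
```

For example, `push_config /usr/program/hadoop/etc/hadoop slave1 slave2 slave3` lists the three copies, and prefixing the call with `RUN=1` performs them over the passwordless SSH set up earlier.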

5. Format the NameNode

    [root@master hadoop]# hdfs namenode -format
    17/04/28 23:31:31 INFO namenode.NameNode: STARTUP_MSG:
    /**********************************************************
    ...
    17/04/28 23:43:26 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB
    17/04/28 23:43:26 INFO util.GSet: capacity = 2^15 = 32768 entries
    Re-format filesystem in Storage Directory /hdfs/fs1/dfs/name ? (Y or N) y
    17/04/28 23:43:31 INFO namenode.FSImage: Allocated new BlockPoolId: BP-96998263-192.168.211.132-1493394211008
    17/04/28 23:43:31 INFO common.Storage: Storage directory /hdfs/fs1/dfs/name has been successfully formatted.
    17/04/28 23:43:31 INFO namenode.FSImageFormatProtobuf: Saving image file /hdfs/fs1/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
    17/04/28 23:43:31 INFO namenode.FSImageFormatProtobuf: Image file /hdfs/fs1/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 351 bytes saved in 0 seconds.
    17/04/28 23:43:31 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    17/04/28 23:43:31 INFO util.ExitUtil: Exiting with status 0
    17/04/28 23:43:31 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at master/192.168.211.132
    ************************************************************/

6. Start the cluster

    [root@master sbin]# ./start-all.sh
    This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
    Starting namenodes on [master]
    master: starting namenode, logging to /usr/program/hadoop/logs/hadoop-root-namenode-master.out
    slave1: starting datanode, logging to /usr/program/hadoop/logs/hadoop-root-datanode-slave1.out
    slave2: starting datanode, logging to /usr/program/hadoop/logs/hadoop-root-datanode-slave2.out
    slave3: starting datanode, logging to /usr/program/hadoop/logs/hadoop-root-datanode-slave3.out
    Starting secondary namenodes [0.0.0.0]
    0.0.0.0: starting secondarynamenode, logging to /usr/program/hadoop/logs/hadoop-root-secondarynamenode-master.out
    starting yarn daemons
    starting resourcemanager, logging to /usr/program/hadoop/logs/yarn-root-resourcemanager-master.out
    slave2: starting nodemanager, logging to /usr/program/hadoop/logs/yarn-root-nodemanager-slave2.out
    slave3: starting nodemanager, logging to /usr/program/hadoop/logs/yarn-root-nodemanager-slave3.out
    slave1: starting nodemanager, logging to /usr/program/hadoop/logs/yarn-root-nodemanager-slave1.out
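The first line of the output says that start-all.sh is deprecated and names the two scripts to use instead. A small sketch of calling them in order follows; `start_cluster` is a hypothetical wrapper, and the sbin directory is passed in as an argument instead of being assumed.

```shell
#!/bin/bash
# Hypothetical wrapper: start HDFS first, then YARN, from the given sbin directory.
start_cluster() {
    local sbin="$1"
    # YARN is started only if the HDFS start script exits successfully.
    "$sbin/start-dfs.sh" && "$sbin/start-yarn.sh"
}
```

With the layout used in this article the call would be `start_cluster /usr/program/hadoop/sbin`, run on the master node.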

7. Check the running status

    [root@master sbin]# jps
    6624 ResourceManager
    6888 Jps
    6458 SecondaryNameNode
    6254 NameNode
    [root@master hadoop]# hdfs dfsadmin -report
    Configured Capacity: 147757621248 (137.61 GB)
    Present Capacity: 125708881920 (117.08 GB)
    DFS Remaining: 125708795904 (117.08 GB)
    DFS Used: 86016 (84 KB)
    DFS Used%: 0.00%
    Under replicated blocks: 0
    Blocks with corrupt replicas: 0
    Missing blocks: 0
    Missing blocks (with replication factor 1): 0
    -------------------------------------------------
    Live datanodes (3):
    Name: 192.168.211.135:50010 (slave3)
    Hostname: slave3
    Decommission Status : Normal
    Configured Capacity: 49252540416 (45.87 GB)
    DFS Used: 28672 (28 KB)
    Non DFS Used: 7345811456 (6.84 GB)
    DFS Remaining: 41906700288 (39.03 GB)
    DFS Used%: 0.00%
    DFS Remaining%: 85.09%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)

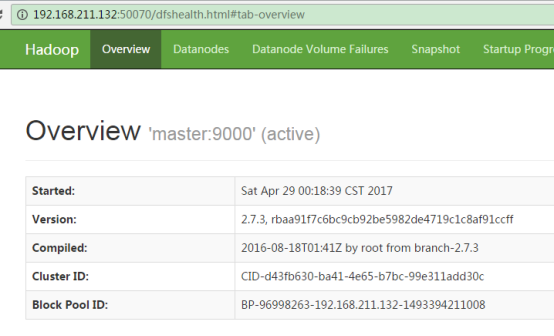

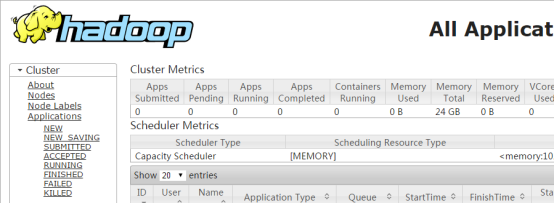
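Reading the jps output by eye can be replaced with a small check. The sketch below reads jps output on standard input and reports any expected daemon that is not listed; `missing_daemons` is a hypothetical helper name. It compares the whole class name, so SecondaryNameNode is not mistaken for NameNode.

```shell
#!/bin/bash
# Hypothetical helper: read jps output on stdin and report expected daemons that are absent.
missing_daemons() {
    local output daemon missing=0
    output="$(cat)"
    for daemon in "$@"; do
        # jps prints "<pid> <MainClass>"; compare the second field exactly.
        if ! printf '%s\n' "$output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "missing: $daemon"
            missing=1
        fi
    done
    # Non-zero when at least one expected daemon was not found.
    return "$missing"
}
```

On the master this could be run as `jps | missing_daemons NameNode SecondaryNameNode ResourceManager`. On each slave, going by the startup log above, the expected names would be DataNode and NodeManager.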

8. View in a browser

http://192.168.211.132:50070/dfshealth.html#tab-overview

http://192.168.211.132:8088/cluster
